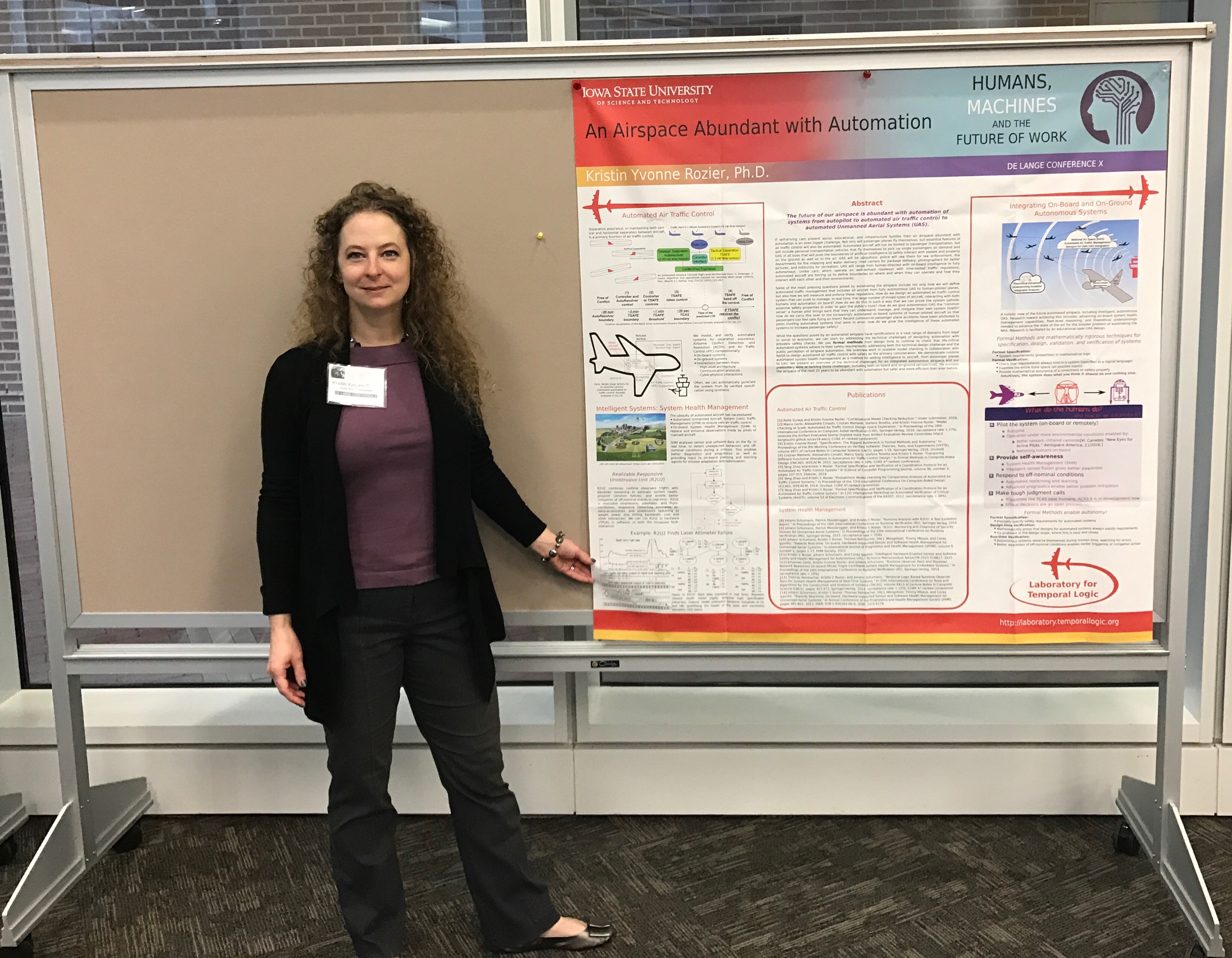

A survey poster on the research from the Laboratory for Temporal Logic De Lange Conference X on “Humans, Machines, and the Future of Work” showcased many of the lab’s current projects. The poster, titled “An Airspace Abundant with Automation” features current research on automated air traffic control for both manned and unmanned aircraft, intelligent sensor fusion for automated reasoning, system health management for Unmanned Aerial Systems (UAS) and beyond, and the formal techniques required for verification required for enabling autonomy of these safety-critical systems. Thanks to all of the students and collaborators of the Laboratory for Temporal Logic for your contributions!

Category Archives: Laboratory Collaborations

Patrick Moosbrugger Wins 2016 Kapsch Award

Congratulations to Ph.D. student Patrick Moosbrugger, recipient of the 2016 Kapsch Award for the best Masters thesis from the University of Applied Sciences, Technikum Wien! The Kapsch Award is given for the combination of the innovations and contributions of the MS thesis, outstanding academic performance of the student in coursework, and completing the MS degree exam with honors. Patrick was one of seven awardees across 18 MS degree programs. His MS thesis advances system health management for both safety and security requirements using R2U2.

The story is covered in several places:

- From the Austrian Press Agency (only German):

http://science.apa.at/rubrik/bildung/Kapsch_Award_fuer_die_besten_Masterthesen_von_der_FH_Technikum_Wien/SCI_20161104_SCI39411351832842748 - From the sponsor KAPSCH (pdf or webpage in English):

https://www.kapsch.net/getattachment/bce9c3cd-f746-448f-a0af-40b98d2a6716/kag_161104_pr

https://www.kapsch.net/KapschGroup/press/kapschgroup/kag_161104_pr - Additional pictures are here:

https://alumni.technikum-wien.at/fotos/kapsch-award-verleihung-2016

Filed under Awards, Laboratory Collaborations, News Features

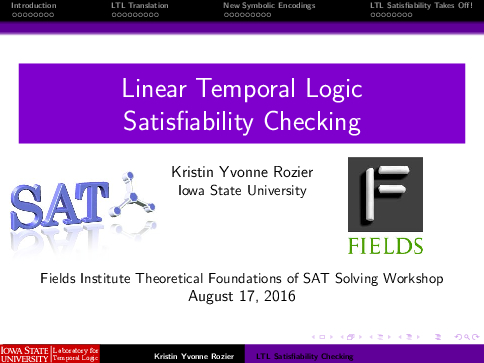

Theoretical Foundations of SATisfiability Solving

The Fields Institute for Research in Mathematical Sciences hosted an invitation-only Workshop on Theoretical Foundations of SAT Solving August 15-19, 2016. This workshop explored one of the most important problems in all of mathematics and computer science: the Boolean satisfiability (SAT) problem. The workshop focused on the state-of-the-art research developments in Boolean satisfiability, the quintessential NP-complete problem. While SAT is believed to be intractable in general, researchers have contributed dramatic developments in algorithmic techniques in solving SAT during the last two decades. Modern SAT solvers can now solve large real-world instances obtained from wide-ranging applications such as hardware and software verification, electronic design automation, artificial intelligence, bioinformatics, and operations research; these advances were featured in the workshop’s seminars, available online. The workshop was organized by eight of the most prominent researchers in SAT: Vijay Ganesh – University of Waterloo (Lead Organizer); Armin Biere – Johannes Kepler University; Sam Buss – University of California, San Diego; John Franco – University of Cincinatti; Holger Hoos – University of British Columbia; Jakob Nordström – KTH, Royal Institute of Technology; Alasdair Urquhart – University of Toronto; Moshe Vardi – Rice University.

The Fields Institute for Research in Mathematical Sciences hosted an invitation-only Workshop on Theoretical Foundations of SAT Solving August 15-19, 2016. This workshop explored one of the most important problems in all of mathematics and computer science: the Boolean satisfiability (SAT) problem. The workshop focused on the state-of-the-art research developments in Boolean satisfiability, the quintessential NP-complete problem. While SAT is believed to be intractable in general, researchers have contributed dramatic developments in algorithmic techniques in solving SAT during the last two decades. Modern SAT solvers can now solve large real-world instances obtained from wide-ranging applications such as hardware and software verification, electronic design automation, artificial intelligence, bioinformatics, and operations research; these advances were featured in the workshop’s seminars, available online. The workshop was organized by eight of the most prominent researchers in SAT: Vijay Ganesh – University of Waterloo (Lead Organizer); Armin Biere – Johannes Kepler University; Sam Buss – University of California, San Diego; John Franco – University of Cincinatti; Holger Hoos – University of British Columbia; Jakob Nordström – KTH, Royal Institute of Technology; Alasdair Urquhart – University of Toronto; Moshe Vardi – Rice University.

The related topic of Linear Temporal Logic Satisfiability builds on advances in SAT in many ways. Professor Kristin Yvonne Rozier contributed a talk on Linear Temporal Logic Satisfiability Checking exploring this relationship with aerospace domain examples.

Abstract:

One of the goals of this workshop is to identify SAT solving techniques essential to application settings. Aerospace operational concepts are often specified with timelines; we find the Boolean variables and propositional clauses of traditional propositional SAT laid out over time. Therefore, we capture these industrial requirements in Linear Temporal Logic; we must efficiently check LTL satisfiability in order to assure correctness of these requirements before we use them for further analysis, such as model checking or runtime monitoring. We argue that to prevent introducing design or verification errors, it is crucial to test specifications for satisfiability.

We have established LTL satisfiability checking as a sanity check: each system requirement, its negation, and the set of all requirements should be checked for satisfiability before being utilized for other tasks. We demonstrated that LTL satisfiability checking reduces to model checking, and developed new encodings that performed significantly, sometimes exponentially, better than was previously possible. Others then built on our work to create new stand-alone tools for LTL Satisfiability. However, the state of the art leaves many open questions for future applications of LTL satisfiability.

Slides for this presentation on Linear Temporal Logic Satisfiability Checking are available online.

Filed under Laboratory Collaborations, Research Talks

Parallella flies with NASA (and the Laboratory for Temporal Logic)

Parallella has found our research and featured us on their website with the headline Parallella Flies with NASA!

Parallella has featured our NASA Technical Memorandum::

- Kristin Yvonne Rozier, Johann Schumann, and Corey Ippolito. “Intelligent Hardware-Enabled Sensor and Software Safety and Health Management for Autonomous UAS.” Technical Memorandum NASA/TM-2015-218817, May, 2015. PDF BibTeX

However, the Parallella FPGA is actually featured in several of our publications, including:

- Johann Schumann, Patrick Moosbrugger, and Kristin Y. Rozier. “R2U2: Monitoring and Diagnosis of Security Threats for Unmanned Aerial Systems.” In Proceedings of the 15th International Conference on Runtime Verification (RV15), Springer-Verlag, Vienna, Austria, September 22–25, 2015. PDF BibTeX

- Johann Schumann, Kristin Y. Rozier, Thomas Reinbacher, Ole J. Mengshoel, Timmy Mbaya, and Corey Ippolito. “Towards Real-time, On-board, Hardware-supported Sensor and Software Health Management for Unmanned Aerial Systems.” In International Journal of Prognostics and Health Management (IJPHM), volume 6, number 1, pages 1–27, PHM Society, June, 2015. PDF BibTeX

- Johannes Geist, Kristin Yvonne Rozier, and Johann Schumann. “Runtime Observer Pairs and Bayesian Network Reasoners On-board FPGAs: Flight-Certifiable System Health Management for Embedded Systems.” In 14th International Conference on Runtime Verification (RV14), Springer-Verlag, Toronto, Canada, September 22-25, 2014. PDF (Proceedings version: PDF) BibTeX Experiments/Downloads

- Thomas Reinbacher, Kristin Y. Rozier, and Johann Schumann. “Temporal-Logic Based Runtime Observer Pairs for System Health Management of Real-Time Systems.” In 20th International Conference on Tools and Algorithms for the Construction and Analysis of Systems (TACAS), volume 8413 of Lecture Notes in Computer Science (LNCS), pages 357–372, Springer-Verlag, Grenoble, France, 5-13 April 2014. PDF (Proceedings version: PDF) BibTeX Tools and Simulation Traces

- Johann Schumann, Kristin Y. Rozier, Thomas Reinbacher, Ole J. Mengshoel, Timmy Mbaya, and Corey Ippolito. “Towards Real-time, On-board, Hardware-supported Sensor and Software Health Management for Unmanned Aerial Systems.” In 2013 Annual Conference of the Prognostics and Health Management Society (PHM2013), pages 381–401. October, 2013. PDF BibTeX

Filed under Laboratory Collaborations, News Features

Formal Methods and Data Science at the World Bank!

Professor Kristin Yvonne Rozier teamed up with Dr. Jeff Alstott of MIT and Singapore University of Technology to give a forward-looking presentation on “Company Network Analysis” for the World Bank Group’s “Teams Working on Corruption, Procurement, & Data Analysis: a ‘Hands-on Workshop’ on progress and future planning on using data to identify and address corruption in procurement” in Washington, D.C. on October 5, 2015. The presentation highlighted the analysis completed at the Data Science for Social Good (DSSG) program at the University of Chicago in the years 2014-2015 and a vision for increasing precision and effectiveness of the analysis using a formal methods solution.

Kristin Y. Rozier presenting a Formal Methods vision for the future of World Bank procurement efforts to better combat corruption.

Members of the teams from two years of Data Science for Social Good were reunited with their World Bank collaborators at the meeting. Presenters included Eric Rozier and Jeff Alstott from the 2014 DSSG fellowship and Emily Grace, Elissa Redmiles, and Kristin Y. Rozier from the 2015 fellowship, and long-time DSSG Research Fellow Matt Gee.

Filed under Laboratory Collaborations, Research Talks

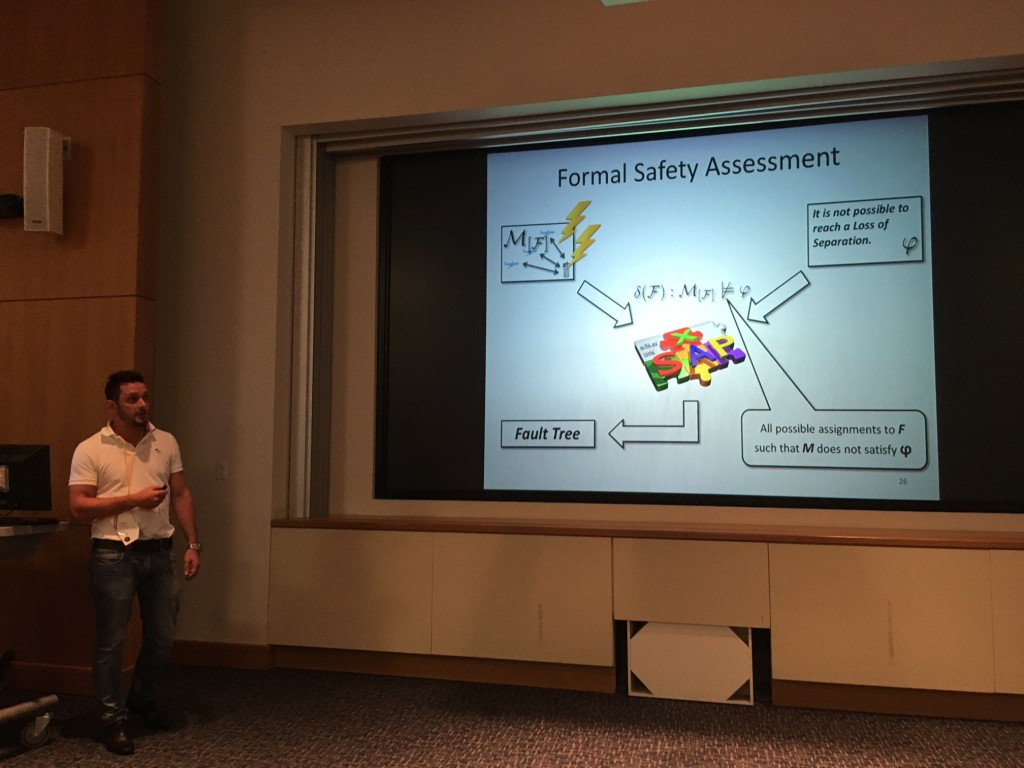

Cristian Mattarei presents at FMCAD 2015: “Comparing Different Functional Allocations in Automated Air Traffic Control Design“

PhD candidate Cristian Mattarei gave a wonderful presentation of our work on “Comparing Different Functional Allocations in Automated Air Traffic Control Design” by Cristian Mattarei, Alessandro Cimatti, Marco Gario, Stefano Tonetta, and Kristin Y. Rozier today at Formal Methods in Computer-Aided Design (FMCAD) 2015 at the University of Texas at Austin. Our paper stems from a fruitful collaboration with the Embedded Systems Laboratory at the Fondazione Bruno Kessler (FBK) in Trento, Italy.

The slides are available here.

ABSTRACT:

In the early phases of the design of safety-critical

systems, we need the ability to analyze the safety of different

design solutions, comparing how different functional allocations

impact the overall reliability of the system. To achieve this

goal, we can apply formal techniques ranging from model

checking to model-based fault-tree analysis. Using the results

of the verification and safety analysis, we can compare different

solutions and provide the domain experts with information on

the strengths and weaknesses of each solution.

In this paper, we consider NASA’s early designs and functional

allocation hypotheses for the next air traffic control system for

the United States. In particular, we consider how the allocation of

separation assurance capabilities and the required communication

between agents affects the safety of the overall system. Due

to the high level of details, we need to abstract the domain while

retaining all of the key properties of NASA’s designs. We present

the modeling approach and verification process that we adopted.

Finally, we discuss the results of the analysis when comparing

different configurations including both new, self-separating and

traditional, ground-separated aircraft.

Dagstuhl Seminar: Theory and Practice of SAT Solving

Dagstuhl Seminar 15171 on the “Theory and Practice of SAT Solving” tackled one of the most fundamental computational questions: proving logic formulas. The seminar brought together the fields of SAT solving and proof complexity to explore questions of both theoretical and practical interest in designing real-world complex systems, citing examples from hardware and software verification, electronic design automation, artificial intelligence research, cryptography, bioinformatics, operations research, and railway signalling systems. The seminar explored this interdisciplinary space, considering questions like, “Can Proof Complexity Shed Light on Crucial SAT Solving Issues?”

Professor Kristin Yvonne Rozier contributed a seminar on “Linear Temporal Logic Satisfiability Checking,”

Abstract:

Formal verification techniques are growing increasingly vital for the development of safety-critical software and hardware. Techniques such as requirements-based design and model checking have been successfully used to verify systems for air traffic control, airplane separation assurance, autopilots, logic designs, medical devices, and other functions that ensure human safety. Formal behavioral specifications written early in the system-design process and communicated across all design phases increase the efficiency, consistency, and quality of the system under development. We argue that to prevent introducing design or verification errors, it is crucial to test specifications for satisfiability.

In 2007, we established LTL satisfiability checking as a sanity check: each system requirement, its negation, and the set of all requirements should be checked for satisfiability before being utilized for other tasks, such as property-based system design or system verification via model checking. We demonstrated that LTL satisfiability checking reduces to model checking; an extensive experimental evaluation proved that for LTL satisfiability checking, the symbolic approach is superior to the explicit approach. However, the performance of the symbolic approach critically depends on the encoding of the formula. Since 1994, there had been essentially no new progress in encoding LTL formulas as symbolic automata for BDD-based analysis. We introduced a set of 30 symbolic automata encodings, demonstrating that a portfolio approach utilizing these encodings translates to significant, sometimes exponential, improvement over the standard encoding for symbolic LTL satisfiability checking. In recent years, LTL satisfiability checking has taken off, with others inventing exciting new methods to scale with increasingly complex systems. We revisit the benchmarks for LTL satisfiability checking that have become the de facto industry standard and examine the encoding methods that have led to leaps in performance. We highlight the past and present, and look to the future of LTL satisfiability checking, a sanity check that now has an established place in the development cycles of safety-critical systems.

Filed under Laboratory Collaborations, Research Talks

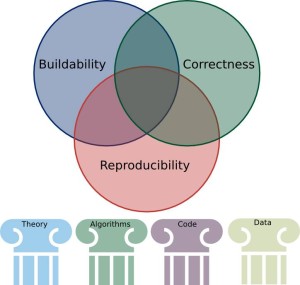

Reproducibility, Correctness, and Buildability: the Three Principles for Ethical Public Dissemination of Computer Science and Engineering Research

Our paper has been published in IEEE Ethics 2014! This is the first collaboration with the Trustworthy Data Engineering Lab: Reproducibility, Correctness, and Buildability: the Three Principles for Ethical Public Dissemination of Computer Science and Engineering Research.

NASA Formal Methods Symposium 2014

Dr. Kristin Y. Rozier (NASA ARC), in collaboration with Dr. Julia Badger (NASA JSC), served as Programme Committee chairs of the sixth annual NASA Formal Methods Symposium (NFM), held April 29 through May 1, 2014. Dr. Michael Hinchey (NASA GSFC) served as General Chair. NFM 2014 was held in the Gilruth Center at NASA JSC in Houston, Texas; JSC gave NFM a warm welcome, sending Steve Stich, Deputy Director of Engineering, to deliver a welcome address on the center’s behalf.

Dr. Kristin Y. Rozier (NASA ARC), in collaboration with Dr. Julia Badger (NASA JSC), served as Programme Committee chairs of the sixth annual NASA Formal Methods Symposium (NFM), held April 29 through May 1, 2014. Dr. Michael Hinchey (NASA GSFC) served as General Chair. NFM 2014 was held in the Gilruth Center at NASA JSC in Houston, Texas; JSC gave NFM a warm welcome, sending Steve Stich, Deputy Director of Engineering, to deliver a welcome address on the center’s behalf.

NFM is a selective, fully peer-reviewed publication venue that attracts high-quality original research. The symposium is the result of a collaboration between formal methods researchers at six NASA centers: ARC, JPL, LaRC, JSC, GSFC, and AFRC.

NFM 2014 received 107 abstract submissions, 83 of which resulted in full papers: in total 50 regular papers describing fully developed work and complete results; and 33 short papers describing tools, experience reports, or descriptions of work in progress with preliminary results. Out of these, 20 regular papers and 9 short papers were accepted, giving an overall acceptance rate of 35% (a 40% rate for regular papers and a 27% rate for short papers). All submissions went through a rigorous reviewing process, where each paper was read by at least three reviewers.

In addition to the refereed papers, the symposium featured three invited talks and a panel feature. NASA provided a special guest talk on “NASA Future Challenges in Formal Methods,” delivered by Bill McAllister, Chief, Safety and Mission Assurance, International Space Station Safety Panels, Avionics and Software Branch, NASA Johnson Space Center. Professor Lawrence C. Paulson from the University of Cambridge gave a keynote talk on “Theorem Proving and the Real Numbers: Overview and Challenges.” Professor Moshe Y. Vardi from Rice University gave a keynote talk on “Compositional Temporal Synthesis.”

The NFM 2014 panel feature, titled “Future Directions of Specifications for Formal Methods,” featured panelists Matt Dwyer of the University of Nebraska, Hadas Kress-Gazit of Cornell University, and Moshe Y. Vardi of Rice University. Specifications are required for all applications of formal methods yet extracting specifications for real-life safety critical systems often proves to be a huge bottleneck or even an insurmountable hurdle to the application of formal methods in practice. This is the state for safety-critical systems today and as these systems grow more complex, more pervasive, and more powerful in the future, there is not a clear path even for maintaining the bleak status quo. Therefore, NFM 2014 highlighted this issue in the home of an important critical system, the Mission Control Center of NASA’s most famous critical systems, and asked our panelists where we can go from here.

NFM 2014 was attended by over a hundred formal methods researchers from around the world. Springer published the NFM symposium proceedings as a volume in the Lecture Notes of Computer Science (LNCS) series: http://link.springer.com/book/10.1007/978-3-319-06200-6.

The international program committee (PC) for NFM 2014 consisted of some of the most prominent researchers in the field of formal verification. In total, the PC consisted of 44 members from NASA, academia, industry, and other government labs who were aided in completing the extensive peer reviews by 53 additional expert external reviewers. The PC included ARC team members Guillaume Brat, Ewen Denney, Kristin Y. Rozier, Neha Rungta, Johann Schumann, Oksana Tkachuk, and Arnaud Venet. Additional reviewers included ARC team members Ganesh Pai and Sarah Thompson.

Plans are already underway for NFM 2015, which will be hosted by NASA JPL.

For more information, including the accepted papers, see the official NFM website: http://www.NASAFormalMethods.org

BACKGROUND:

The widespread use and increasing complexity of mission- and safety-critical systems require advanced techniques that address their specification, verification, validation, and certification requirements. The NASA Formal Methods Symposium is a forum for theoreticians and practitioners from academia, industry, and government, with the goals of identifying challenges and providing solutions to achieving assurance in mission- and safety-critical systems. Within NASA such systems include autonomous robots, separation assurance algorithms for aircraft, Next Generation Air Transportation (NextGen), and autonomous rendezvous and docking for spacecraft. Moreover, emerging paradigms such as property-based design, code generation, and safety cases are bringing with them new challenges and opportunities. The focus of the symposium was on formal techniques, their theory, current capabilities, and limitations, as well as their application to aerospace, robotics, and other safety-critical systems in all design life-cycle stages. We encouraged work on cross-cutting approaches marrying formal verification techniques with advances in safety-critical system development, such as requirements generation, analysis of aerospace operational concepts, and formal methods integrated in early design stages carrying throughout system development.

The NASA Formal Methods Symposium is an annual event that was created to highlight the state of the art in formal methods, both in theory and in practice. The series is a spinoff of the original Langley Formal Methods Workshop (LFM). LFM was held six times in 1990, 1992, 1995, 1997, 2000, and 2008 near NASA Langley in Virginia, USA. In 2009 the first NASA Formal Methods Symposium was organized by NASA Ames Research Center in Moffett Field, CA. In 2010, the Symposium was organized by NASA Langley Research Center and NASA Goddard Space Flight Center, and held at NASA Headquarters in Washington, D.C. The third NFM symposium was organized by the Laboratory for Reliable Software at the NASA Jet Propulsion Laboratory/California Institute of Technology, and held in Pasadena, CA in 2011. NFM returned to NASA Langley Research Center in 2012; the Symposium was organized by the NASA Langley Formal Methods Group in nearby Norfolk, Virginia. NASA Ames Research Center organized and hosted NFM 2013, the fifth Symposium in the series. This year’s Symposium was organized via a collaboration between NASA Goddard Space Flight Center, NASA Johnson Space Center, and NASA Ames Research Center.

The topics covered by NFM 2014 include but are not limited to: model checking; theorem proving; static analysis; model-based development; runtime monitoring; formal approaches to fault tolerance; applications of formal methods to aerospace systems; formal analysis of cyber-physical systems, including hybrid and embedded systems; formal methods in systems engineering, modeling, requirements, and specifications; requirements generation, specification debugging, formal validation of specifications; use of formal methods in safety cases; use of formal methods in human-machine interaction analysis; formal methods for parallel hardware implementations; use of formal methods in automated software engineering and testing; correct-by-design, design for verification, and property-based design techniques; techniques and algorithms for scaling formal methods, e.g., abstraction and symbolic methods, compositional techniques, parallel and distributed techniques; application of formal methods to emerging technologies.

Women and the Flat Connected World

The 2009 Grace Hopper Celebration (GHC) of Women in Computing Conference features our panel on “Women and the Flat Connected World.” The panel features Meenakshi Kaul-Basu (Sun Microsystems), Bev Crair (Quantum Corporation), Claudia Galván (Microsoft Corporation), Kristin Yvonne Rozier (NASA), Lydia Ash (Google), Radha Ratnaparkhi (IBM), and Sumitha Prashanth (Sun Microsystems). See the story: GHC09: Women in the Flat Connected World.”,/A> GHC is in Tucson, Arizona, September 29-October 3, 2009.

The 2009 Grace Hopper Celebration (GHC) of Women in Computing Conference features our panel on “Women and the Flat Connected World.” The panel features Meenakshi Kaul-Basu (Sun Microsystems), Bev Crair (Quantum Corporation), Claudia Galván (Microsoft Corporation), Kristin Yvonne Rozier (NASA), Lydia Ash (Google), Radha Ratnaparkhi (IBM), and Sumitha Prashanth (Sun Microsystems). See the story: GHC09: Women in the Flat Connected World.”,/A> GHC is in Tucson, Arizona, September 29-October 3, 2009.